OpenAI Moderation

Overview

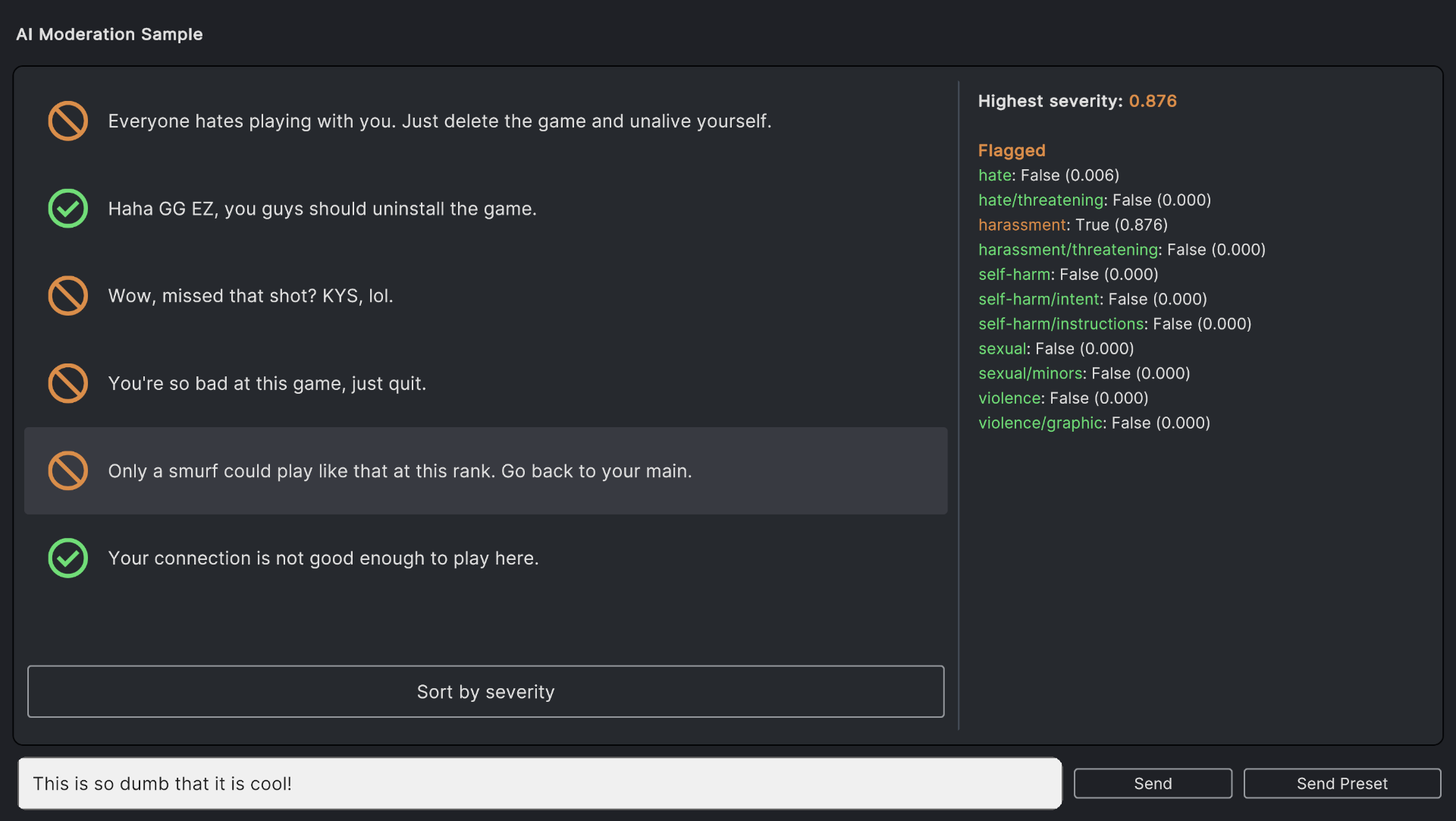

The Moderation API is a service that uses machine learning to detect and filter inappropriate content in text. It can be used to moderate user-generated content, such as comments, nicknames, and messages, so that it complies with community guidelines and legal requirements.

A pre-compiled offline vocabulary is a good way to filter offensive content, but it may not be enough. The AI Moderation service complements the ChatGPT API by catching inappropriate content that is not in the vocabulary. In particular, the AI can detect an offensive context even when no offensive, hateful, or slur words are used.

Categories

Filtered content is classified into the following categories:

| Category | Description |
|---|---|
| hate | Content that expresses, incites, or promotes hate based on race, gender, ethnicity, religion, nationality, sexual orientation, disability status, or caste. Hateful content aimed at non-protected groups (e.g., chess players) is harassment. |
| hate/threatening | Hateful content that also includes violence or serious harm towards the targeted group based on race, gender, ethnicity, religion, nationality, sexual orientation, disability status, or caste. |
| harassment | Content that expresses, incites, or promotes harassing language towards any target. |
| harassment/threatening | Harassment content that also includes violence or serious harm towards any target. |
| self-harm | Content that promotes, encourages, or depicts acts of self-harm, such as suicide, cutting, and eating disorders. |
| self-harm/intent | Content where the speaker expresses that they are engaging or intend to engage in acts of self-harm, such as suicide, cutting, and eating disorders. |
| self-harm/instructions | Content that encourages performing acts of self-harm, such as suicide, cutting, and eating disorders, or that gives instructions or advice on how to commit such acts. |
| sexual | Content meant to arouse sexual excitement, such as the description of sexual activity, or that promotes sexual services (excluding sex education and wellness). |
| sexual/minors | Sexual content that includes an individual who is under 18 years old. |
| violence | Content that depicts death, violence, or physical injury. |
| violence/graphic | Content that depicts death, violence, or physical injury in graphic detail. |

In the AI Moderation Chat Demo Scene, the triggered categories are highlighted in the stats on the right side of the chatbox.

For more information on moderation, please refer to the OpenAI documentation.

AI Moderation Demo Scene

A demo scene is included that shows moderation in action using a chatbox. You can type your own phrases to have them checked, or send pre-saved ones. To start the demo, press Play.

For higher accuracy, try splitting long pieces of text into smaller chunks, each less than 2,000 characters.
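The chunking advice above could be sketched as a small helper. This is only an illustration: `ModerationTextChunker` and its `Split` method are hypothetical names, not part of AI Toolbox or the OpenAI API, and breaking at whitespace is an assumption about what makes a sensible chunk boundary.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of AI Toolbox.
public static class ModerationTextChunker
{
    // Splits text into pieces that are each strictly shorter than `limit`
    // characters, preferring to break after the last whitespace in a window.
    public static List<string> Split(string text, int limit = 2000)
    {
        if (limit < 2) throw new ArgumentOutOfRangeException(nameof(limit));

        var chunks = new List<string>();
        if (string.IsNullOrEmpty(text)) return chunks;

        int maxLength = limit - 1; // "less than" the limit
        int start = 0;
        while (start < text.Length)
        {
            int end = start + Math.Min(maxLength, text.Length - start);
            if (end < text.Length)
            {
                // Search backwards for whitespace so words stay intact.
                for (int i = end - 1; i > start; i--)
                {
                    if (char.IsWhiteSpace(text[i]))
                    {
                        end = i + 1; // keep the whitespace with this chunk
                        break;
                    }
                }
            }
            chunks.Add(text.Substring(start, end - start));
            start = end;
        }
        return chunks;
    }
}
```

Each returned chunk can then be sent for moderation separately. Lengths are counted in UTF-16 `char` units, and a run with no whitespace is cut at the hard limit, so adjust the boundary rule if that does not suit your content.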

Quick Start

To use moderation from code instead of the component above, call the static methods of the Moderation class.

Request Method

This method is used to request moderation of the input text.

    public static Action Request(
        string text,
        ModerationParameters parameters,
        Action<ModerationResponse> completeCallback,
        Action<long, string> failureCallback
    )

Parameters:

- text: The input text to check for toxicity.
- parameters: The parameters for the moderation request.
- completeCallback: The callback to be called when the request is completed.
- failureCallback: The callback to be called when the request fails.

CancelAllRequests Method

This method is used to cancel all pending moderation requests.

    public static void CancelAllRequests()
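The two methods above might be combined in a Unity script along the following lines. Treat this as a sketch under stated assumptions rather than the package's reference usage: `ModerationExample` and `Check` are hypothetical names, the namespace that declares the AI Toolbox types is not given here and must be imported separately, and the meaning of the `long` and `string` failure arguments (an error code and a message) is assumed from the signature. The `ModerationParameters` instance is passed in by the caller because its fields are not described in this section.

```csharp
using System;
using UnityEngine;
// Assumption: also import the namespace in which AI Toolbox declares
// Moderation, ModerationParameters, and ModerationResponse.

// Hypothetical example component, not shipped with AI Toolbox.
public class ModerationExample : MonoBehaviour
{
    // Sends `text` for moderation using parameters supplied by the caller.
    public void Check(string text, ModerationParameters parameters)
    {
        // Request returns an Action; its purpose is not described in this
        // section, so the sketch does not use it.
        Moderation.Request(text, parameters, OnComplete, OnFailure);
    }

    private void OnComplete(ModerationResponse response)
    {
        // The fields of ModerationResponse are not listed in this section,
        // so the sketch only logs that a response arrived.
        Debug.Log("Moderation completed: " + response);
    }

    private void OnFailure(long errorCode, string errorMessage)
    {
        // Assumption: the arguments are an error code and a message.
        Debug.LogError($"Moderation failed ({errorCode}): {errorMessage}");
    }

    private void OnDestroy()
    {
        // Cancels every pending moderation request, not only those started
        // by this component.
        Moderation.CancelAllRequests();
    }
}
```

Calling `CancelAllRequests` in `OnDestroy` keeps callbacks from firing on a destroyed object; if several components issue requests at once, a narrower cancellation strategy may be preferable.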

Pricing

The Moderation API is free to use for most developers. Please refer to the OpenAI pricing page for more information.

Having Issues?

If you have any questions or need help with the Moderation functionality in AI Toolbox, please contact us.
