Set up Ollama

With Ollama, you can run popular LLMs such as Llama, Gemma, Qwen, and dozens of others locally, for free!

Ollama Setup

- Open the AI Toolbox Settings window (Edit ▶︎ Project Settings ▶︎ AI Toolbox ▶︎ ‘Ollama’ tab).

- Install the Ollama application on your machine: use the Download at ollama.com button to download the Ollama app. Make sure the app is running after installation.

- Install at least one Ollama model, either manually from the Ollama website or with the Terminal command `ollama run <model_name>`. For example, to install the Llama 3.2 model, use the command `ollama run llama3.2`.

- Test the connection using the Test Connection button.

After installing an additional model, click the Refresh installed models button to update the list of available models in the dropdown.
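The Test Connection and Refresh installed models buttons both depend on the Ollama app's local HTTP server, which listens on http://localhost:11434 by default. As an illustration only (this is not AI Toolbox's code, and the helper names below are hypothetical), here is a minimal Python sketch that performs a similar check by calling Ollama's `GET /api/tags` endpoint, which returns the locally installed models:

```python
import json
import urllib.request
from typing import List, Optional

# Assumption: Ollama is running with its default address and port.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_json: str) -> List[str]:
    """Extract the model names from the JSON body returned by GET /api/tags."""
    data = json.loads(tags_json)
    return sorted(model["name"] for model in data.get("models", []))


def list_installed_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> Optional[List[str]]:
    """Return the installed model names, or None if the Ollama server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(resp.read().decode("utf-8"))
    except OSError:
        # URLError, HTTPError, refused connections and timeouts are all OSError subclasses.
        return None


if __name__ == "__main__":
    models = list_installed_models()
    if models is None:
        print("Ollama is not reachable; make sure the app is running.")
    elif not models:
        print("Connected, but no models are installed (try: ollama run llama3.2).")
    else:
        print("Installed models:", ", ".join(models))
```

If the sketch reports that Ollama is unreachable, start the Ollama app (or run `ollama serve`) and try again.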

To remove a model, use the Terminal command `ollama rm <model_name>`.

Selecting Ollama Chatbot in AI Toolbox

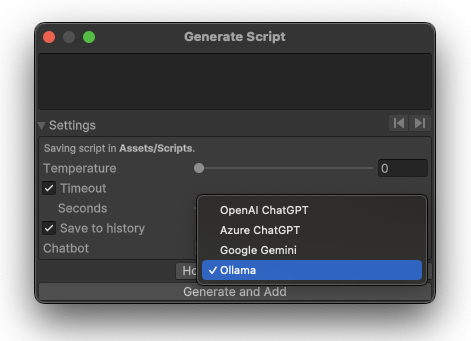

Selecting Ollama Chatbot in the Code Generation Window

- Open the Generate Component window: Window ▶︎ AI Toolbox ▶︎ Generate …. To open the Generate Script window, you can also use the shortcut Ctrl+Shift+S (Windows) or Cmd+Shift+S (Mac).

- Expand the Settings menu at the bottom of the window.

- Select Ollama in the Chatbot dropdown.

- If you have more than one Ollama model installed, you can select the desired model in the AI Toolbox Settings (Edit ▶︎ Project Settings ▶︎ AI Toolbox ▶︎ ‘Ollama’ tab).

Ollama selected as the backend in the ‘Generate Component’ window.

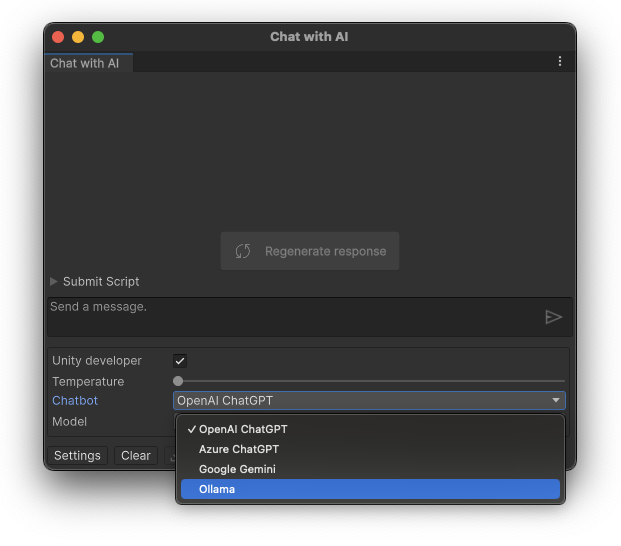

Selecting Ollama Chatbot in the Chat with AI Window

- Open the Chat with AI window: Window ▶︎ AI Toolbox ▶︎ Chat with AI, or use the shortcut Alt+Shift+C (Windows) or Option+Shift+C (Mac).

- Click the Settings button at the bottom of the window.

- Select Ollama in the Chatbot dropdown.

- If you have more than one Ollama model installed, you can select the desired model in the Model dropdown.

Ollama selected as the backend in the ‘Chat with AI’ window.
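Whichever window you use, the request ultimately goes to the locally running model. For reference, Ollama's REST API accepts chat messages at `POST /api/chat`; the minimal Python sketch below sends one prompt with streaming disabled and prints the reply. It assumes the default address and an installed `llama3.2` model, and the helper names are hypothetical; AI Toolbox may build its requests differently.

```python
import json
import urllib.request
from typing import Dict, List

# Assumption: Ollama is running with its default address and port.
OLLAMA_URL = "http://localhost:11434"


def build_chat_payload(model: str, messages: List[Dict[str, str]]) -> bytes:
    """Encode a non-streaming request body for POST /api/chat."""
    return json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")


def extract_reply(response_json: str) -> str:
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return json.loads(response_json)["message"]["content"]


def chat(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send a single user prompt to the given model and return its reply."""
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=build_chat_payload(model, [{"role": "user", "content": prompt}]),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local generation can be slow on modest hardware, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_reply(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        print(chat("llama3.2", "Write a Unity C# script that rotates a GameObject."))
    except OSError as err:
        print(f"Could not get a reply from Ollama: {err}")
```

Setting `"stream": False` makes Ollama return a single JSON object instead of a stream of partial responses, which keeps the parsing simple.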

Ollama Models

The wide range of LLMs available in Ollama can be used for code generation, code explanation, and chatting with your AI assistant. Ollama makes it easy to download, manage, and run these models on your own machine. The full list of models is on the Ollama website.

Some popular models available in Ollama:

- DeepSeek-V3: A large Mixture-of-Experts model known for its strong reasoning and bug-fixing capabilities. It outperforms many proprietary models on the LiveCodeBench coding benchmark.

- Llama 3.2: A family of open-weight models from Meta; the compact 1B and 3B text versions perform well across common NLP tasks such as summarization and instruction following.

- Gemma: A family of lightweight open models from Google, designed for a wide range of applications, including code generation and natural language understanding.

- Qwen: A model family from Alibaba Cloud, optimized for conversational AI and natural, human-like responses; the Qwen2.5-Coder variants focus on code generation.

- Mistral: A 7B-parameter model from Mistral AI that balances speed and accuracy, making it a good fit for responsive, interactive use.

- Alpaca: A fine-tuned version of LLaMA, optimized for instruction-following tasks.
